Equality AI Studio & EqualityML

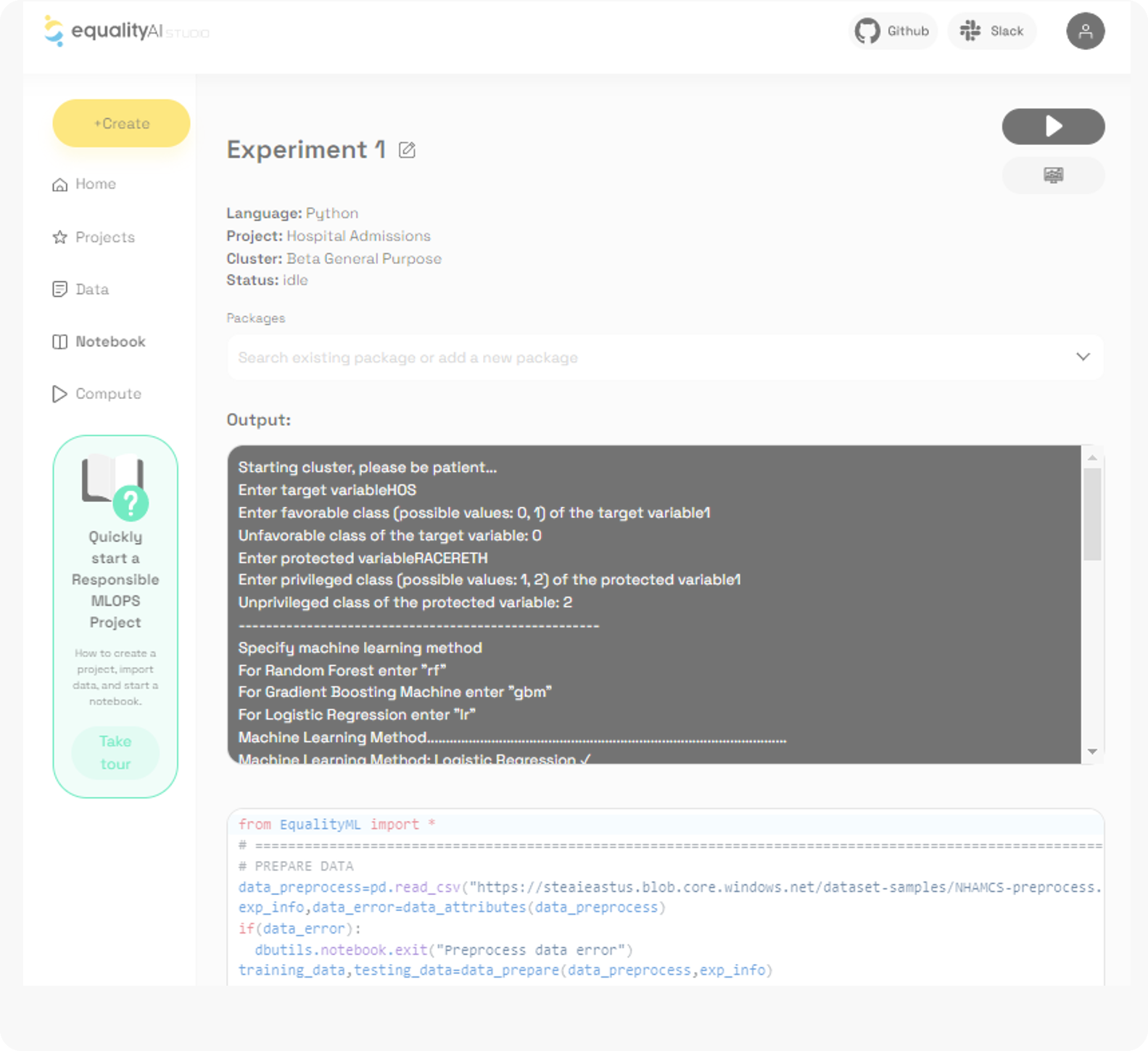

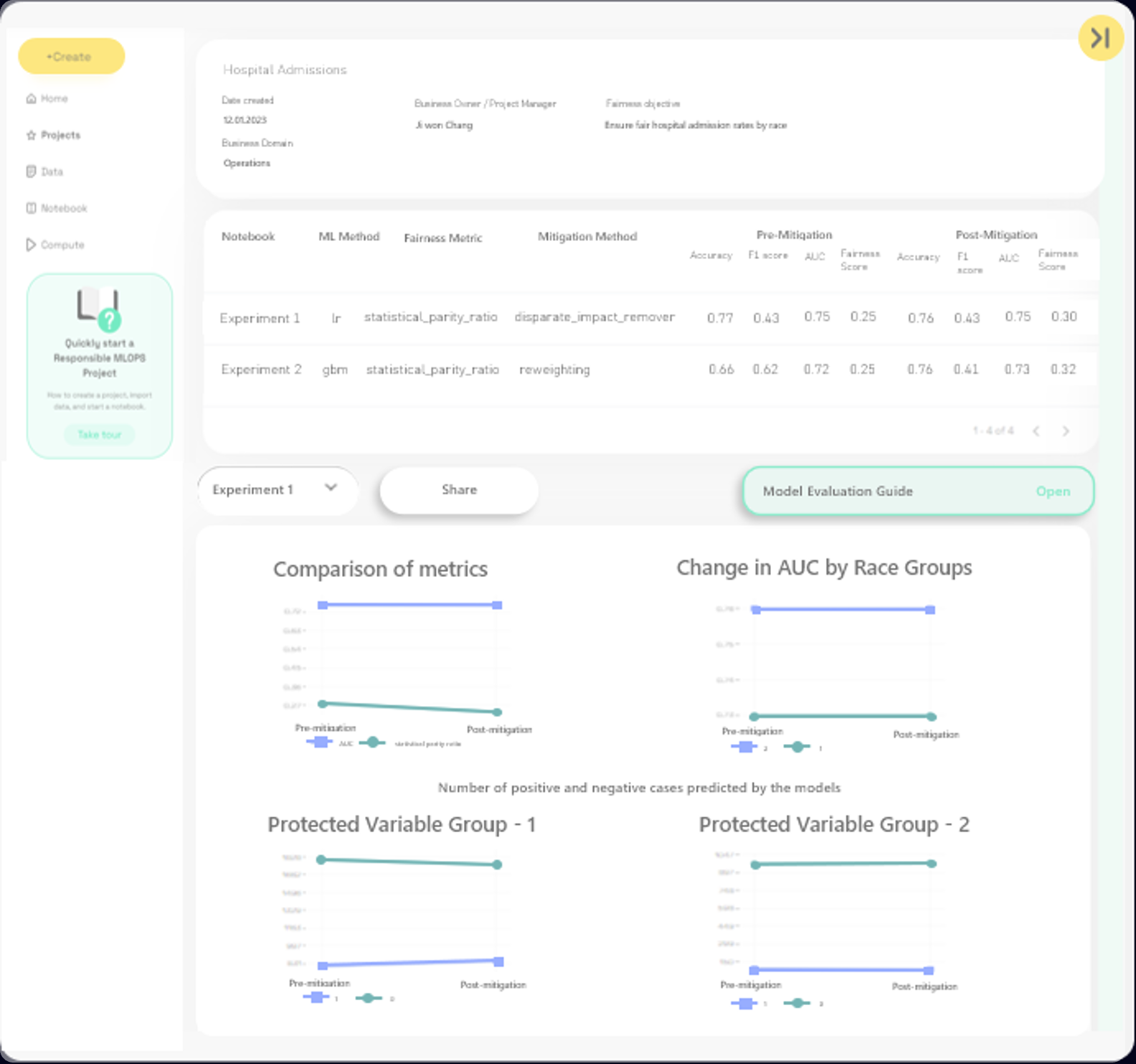

Experience the potential of Human and Machine Intelligence with evidence-based fairness practices in MLOps

One platform for all users

Open-source Python packages and Fair ML learning